IGPP is excited to invite you to its Virtual Seminar Series presentation featuring John W Miles postdoctoral fellow Daniel Blatter. Dr. Blatter's talk, "Turn your gradient-descent inversion algorithm into a Bayesian sampler!", will be available via Zoom on Tuesday, May 25, 2021, starting at 12:00 pm. The seminar will be hosted on Zoom; link here (password = stochastic).

Date: Tuesday, May 25, 2021

Time: 12:00 pm, Pacific Time

Note: This meeting will be recorded. Please make sure that you are comfortable with this before registering.

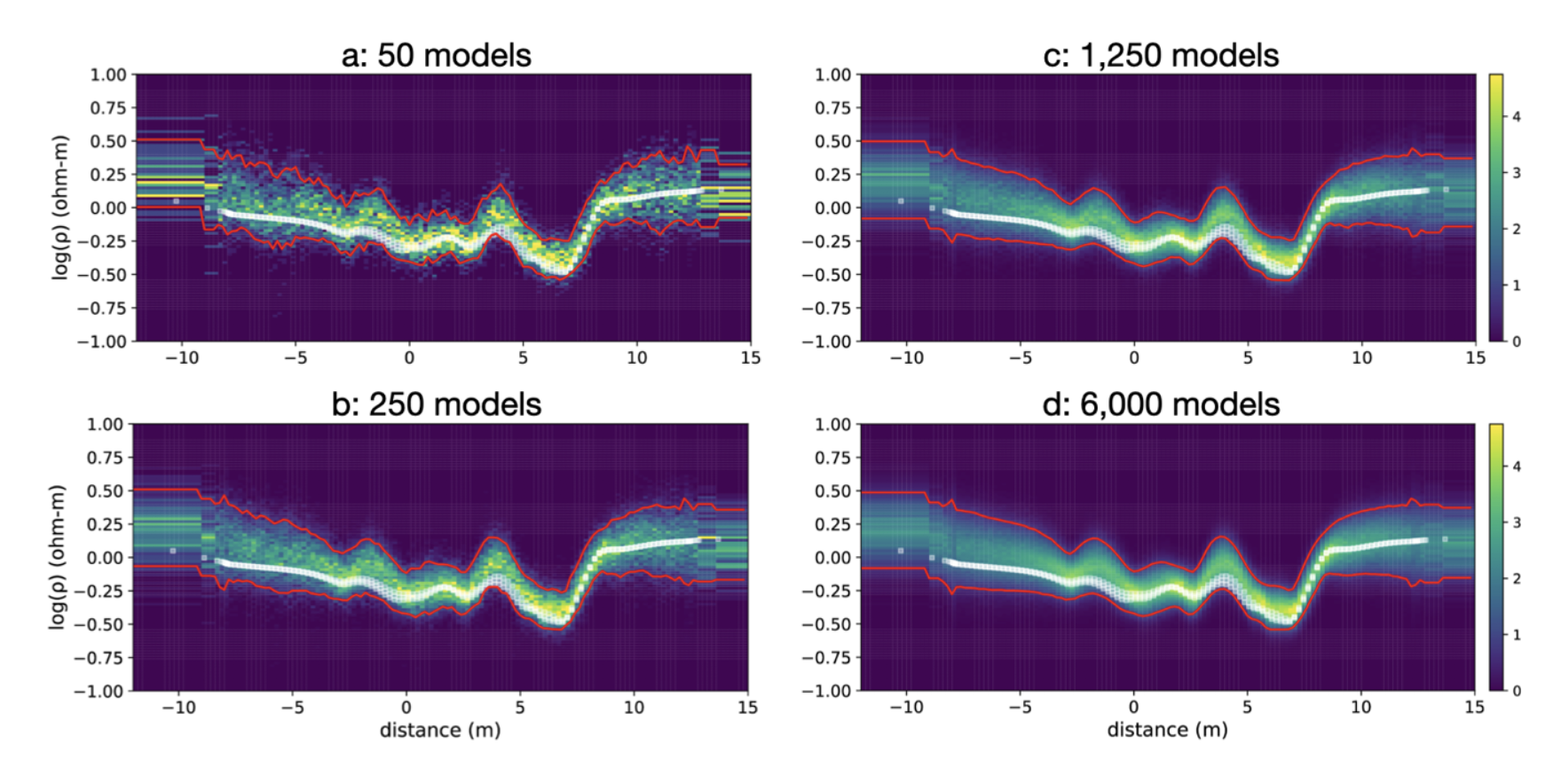

Abstract: In this talk, I will introduce stochastic optimization, an inversion method that produces quantitative uncertainty on inverted model parameters efficiently (in terms of both run time and total flops), even on large problems, and that makes use of existing, well-understood linearized inversion algorithms. Stochastic optimization repurposes linearized, gradient-descent optimization methods in a Bayesian context, transforming them into an efficient Bayesian sampler. This is done by repeatedly minimizing a perturbed cost function, with the perturbations drawn using the data and prior model covariances. In this context, the data misfit term corresponds to the Bayesian likelihood, while the model regularization term corresponds to the Bayesian prior. Stochastic optimization is computationally efficient because, unlike other Bayesian inversion methods such as Markov chain Monte Carlo, it draws independent samples. This means far fewer samples need to be drawn to properly estimate the desired uncertainty. What’s more, these samples can be drawn in parallel, allowing high performance computing to greatly reduce the run time. I will illustrate the features of “randomize, then optimize” (RTO), our stochastic optimization algorithm, on simple 1D problems, then showcase its computational efficiency on a 2D magnetotelluric field data problem.
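For readers who want a concrete picture of the idea in the abstract, here is a minimal sketch in Python. It is not the speaker's RTO code: it assumes a toy linear forward model `G`, uncorrelated Gaussian data noise with standard deviation `sigma_d`, and a Gaussian prior centered on `m0` with standard deviation `sigma_m`. The function name `rto_samples` and all parameter names are hypothetical. Each sample comes from minimizing a cost function whose data and prior terms have been randomly perturbed. In this linear-Gaussian special case the minimizer is an ordinary least-squares solution; a nonlinear problem such as magnetotellurics would instead need an iterative gradient-based inversion for each sample.

```python
import numpy as np


def rto_samples(G, d, sigma_d, m0, sigma_m, n_samples, rng):
    """Sketch of 'perturb, then minimize' sampling for a linear problem.

    Assumes d = G @ m + noise, noise ~ N(0, sigma_d^2 I), and a prior
    m ~ N(m0, sigma_m^2 I). All names here are illustrative only.
    """
    n_data, n_model = G.shape
    # Whitened, stacked system: data-misfit rows on top (likelihood),
    # regularization rows below (prior).
    A = np.vstack([G / sigma_d, np.eye(n_model) / sigma_m])
    samples = np.empty((n_samples, n_model))
    for i in range(n_samples):
        # Randomize: perturb the data and the prior mean using their covariances.
        d_pert = d + sigma_d * rng.standard_normal(n_data)
        m_pert = m0 + sigma_m * rng.standard_normal(n_model)
        b = np.concatenate([d_pert / sigma_d, m_pert / sigma_m])
        # Optimize: minimize ||A m - b||^2, the perturbed cost function.
        samples[i] = np.linalg.lstsq(A, b, rcond=None)[0]
    return samples


# Small synthetic demonstration (values chosen arbitrarily).
rng = np.random.default_rng(42)
G_demo = rng.standard_normal((20, 4))
m_true = np.array([1.0, -0.5, 2.0, 0.0])
d_demo = G_demo @ m_true + 0.1 * rng.standard_normal(20)
draws = rto_samples(G_demo, d_demo, 0.1, np.zeros(4), 1.0, 500, rng)
print("posterior mean estimate:", draws.mean(axis=0))
print("posterior std estimate: ", draws.std(axis=0))
```

Each pass through the loop is independent of the others, which is why the samples could be computed in parallel. For this linear-Gaussian toy problem the draws follow the exact posterior, so their sample mean and covariance can be checked against the closed-form values.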